UX research has never been a fixed discipline.

But in 2025, it is being reshaped by simulation.

Synthetic users, predictive journey modelling, and AI-based empathy mapping are present, functional, and, in some cases, already integrated into design cycles.

Here’s everything you need to know about it: ⬇️

📌 What’s Inside

- What synthetic users actually are and what they are not

- How AI is affecting field studies and behavioural modelling

- The risks of simulated empathy

- Real-world use cases from product teams

🧠What synthetic users actually are and what they are not

Synthetic users refer to AI-generated simulations of human behaviour.

Entities that interact with interfaces based on predictive modelling of human input.

These users are built using a combination of behavioural data, task patterns, NLP inference, and system logic.

They are not personas in the traditional sense, but rather, they are software agents trained to navigate flows the way a typical user statistically might.

Tools like OpenAI’s synthetic user frameworks and IBM’s Predictive UX already offer UX teams the ability to run simulations at scale.

They use historical journey data, eye-tracking archives, interaction heatmaps, cognitive load metrics, and decision trees to train agents that approximate real usage.

Some platforms even integrate speech synthesis and language generation to emulate user commentary.

These systems function through a layered architecture.

At the foundation are interaction models, which are rule-based structures that define how a synthetic user behaves when encountering various UI elements.

These are typically informed by large datasets of prior user behaviour.

On top of that, machine learning layers, which are usually a mix of supervised and reinforcement learning, adjust behaviour over time.

These systems assign probability weights to likely user paths, flag deviations from norm flows, and simulate hesitation or abandonment when friction metrics cross predefined thresholds.

Another key component is generative cognition.

Using large language models, platforms simulate natural-language feedback that mirrors how real users express confusion, dissatisfaction, or approval.

These generative agents are prompted with scenario-based contexts and UI stimuli to return text outputs, which can then be parsed using sentiment analysis.

Some models, such as those being developed in collaboration with Stanford’s HCI lab, even attempt to simulate user goals and mental models across iterative flows.

What makes synthetic research so interesting is the ability to re-run scenarios under altered conditions: different button placements, content rewrites, etc, and immediately observe how predicted user behaviours change.

That kind of testing granularity was once reserved for post-launch analytics or large-scale A/B deployments.

Now, it can happen during design sprints.

The mechanism is worth understanding.

A 2024 paper from the University of Michigan’s Interaction Lab compared synthetic usability simulations to traditional human-based studies, concluding that synthetic agents excel in identifying friction patterns but lack contextual modulation found in live participant feedback.

Synthetic insight has a dual nature: it is valuable in identifying repeatable friction but limited in interpreting nuance.

Yet this limitation does not diminish their value.

At CHI 2025, a Stanford-led panel on human-AI research stressed that synthetic participants are valuable for rapid iteration, yet must be complemented by direct human feedback.

That role may be the most honest and useful one these models can serve in our current practice.

🤖How AI is affecting field studies and behavioural modelling

Most synthetic user models rely on supervised learning: they are trained on known datasets, with outcomes labelled by humans.

The better the data quality, the more precise the simulation.

Some systems now also incorporate reinforcement learning, where agents “learn” optimal behaviours through trial, error, and outcome ranking.

And increasingly, platforms are adding large language models that mimic how real users describe frustrations or confusion in plain language.

But despite these advancements, as you can imagine, the underlying challenge is generalisation.

Human cognition is influenced by memory, mood, culture, and context.

A synthetic user might know that a form field takes too long to complete, but not that a caregiver trying to enter insurance information while managing a sick child will likely abandon the form altogether.

⚠️The risks of simulated empathy

At the end of the day, no synthetic user can ask, “Wait, what does this mean?” out loud.

No AI can pause out of discomfort, hedge its feedback, or choose silence instead of a response.

Those subtle, often inconvenient signals are where some of the richest insights in UX still originate.

The power of synthetic research is scale, but the power of traditional human research is surprise.

It is not always about quantifiable reactions.

Sometimes, it is about the look on a participant’s face when a screen does not behave as expected. Or the language they choose when describing a failure is often loaded with emotion and affect.

Even advanced language models trained on massive datasets cannot replicate real-time social interaction.

After all, a user speaking to a researcher may adjust their tone just to be polite, to test a boundary, or to seek reassurance.

Synthetic users will always execute tasks as they are programmed to.

But humans do not operate from logic alone.

Behavioural economics, affective neuroscience, and cognitive psychology all support this.

We make decisions based on emotion, expectation, trust, and identity.

We hesitate not because the interface is broken, but because something feels off. That feeling has no reliable proxy.

This does not mean synthetic research lacks value.

It can test hypotheses. It can significantly compress timelines. And let’s be honest, working in fast-paced tech environments often means timelines are tight, and research is usually the first thing to be deprioritised.

In that context, synthetic research offers a meaningful advantage.

But it cannot (yet) replicate what it means to be disoriented, to second-guess, to fail with a sense of consequence.

Human-centred research still holds the interpretive edge.

It detects discomfort through posture. It uncovers misalignment not through predicted misclicks, but through lived confusion.

And often, the most actionable insights emerge from what a participant says when the session is “over.”

And yet, to frame synthetic research as lacking insight is only part of the truth.

In many ways, it may end up reshaping how and how well we conduct research.

Synthetic users can explore paths we never thought to test.

They can simulate rare use cases and edge flows at scale. They can act as an early signal, surfacing usability red flags before any user is even involved.

For product teams under pressure to ship fast, this is a major advantage.

When integrated thoughtfully, synthetic agents can highlight what deserves human attention. They act as good filters. The result is prioritisation in service of depth.

In some domains, they may even outperform traditional methods.

Synthetic research can be particularly powerful in high-sensitivity contexts where testing with real users is ethically complex or impractical.

Accessibility audits or security-sensitive flows, among others, are areas where synthetic agents can test assumptions without real-world cost.

🧪 Real-world use cases from product teams

Some of the most promising work in this area attempts to hybridise methods.

For example, in April this year, Adobe’s research team presented at Figma Config a talk titled “Hybrid Testing: Synthetic Journeys and Human Validation.”

They described:

“We spin up thousands of synthetic click-paths to expose edge-case breakdowns, then bring in targeted participants to unpack the anomalies.”

This makes sense.

Most synthetic models do not factor in socioemotional nuance.

Nor do they handle contradiction well, for instance, users who say one thing and do another (a well-documented occurrence in UX research).

Furthermore, because many tools are trained on digital-native user patterns, they risk marginalising users with less exposure to standard conventions.

These omissions raise questions about how and when we use them.

From a theoretical lens, the use of synthetic users mirrors simulation theory in cognitive science, where predicting another’s behaviour is modelled through constructed approximations.

But simulation is not the same as experience.

The field of affective computing continues to show that machine-inferred emotion is not equivalent to felt emotion.

That distinction matters when the goal of research is to understand how people feel, not just what they do.

So does it work?

The answer depends on what “work” means. If the goal is speed and breadth, synthetic users excel.

If the goal is empathy and unpredictability, perhaps not so much.

And maybe this is the crux: synthetic users can be excellent scouts, but they are poor ethnographers.

They help map the terrain but cannot explain the weather.

We are beginning to recognise this.

And so, the most adaptive teams are integrating synthetic testing not as a replacement for human research, but as a filtering tool, acknowledging the strengths of each method without collapsing one into the other.

There is also a broader cultural question.

As AI-generated research becomes more common, will the field start optimising for what machines can measure?

If that happens, we risk designing for certainty instead of meaning. We risk overfitting our products to simulated truths.

Synthetic research is most definitely a change.

AI systems are becoming more clever, more precise, and more adaptive by the month.

To ignore them is to discard a growing advantage.

The question is not whether we use them but where we use them.

Synthetic users may not yet replace rich qualitative interviews, but they can complement them.

They can prototype behavioural insight. They can shape early hypotheses. They can act as fast scouts across uncertain terrain.

Perhaps the future of UX research lies not in picking one side or the other but merging them together.

Allow machines to explore what they are best at: scale, consistency and repeatability, and let people guide where judgment and care are required.

Subscribe on Substack⬇️

If you’ve found this content valuable, here’s how you can show your support.⬇️❤️

You might also like:

📚 Sources & Further Reading

- NNG: Synthetic Users: If, When, and How to Use AI-Generated “Research”

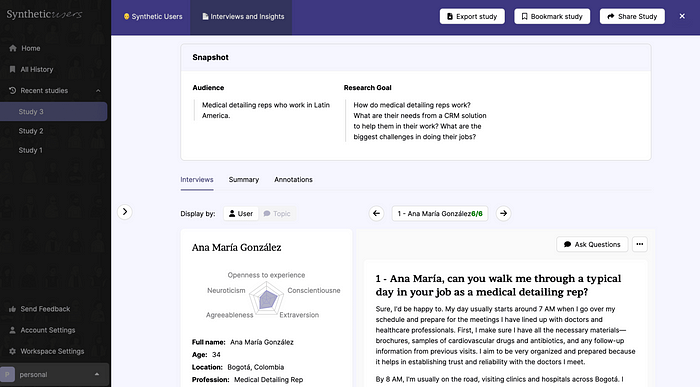

- Delve.ai: Are Synthetic Personas the New Normal of User Research?

- University of Michigan: Free Lunch for User Experience: Crowdsourcing Agents for User Studies

- (Recording: Adobe Design, “Hybrid Testing: Synthetic Journeys and Human Validation,” Config 25, 15 April 2025) (internal recording, Config 25 — not publicly archived)

Share this article: