Why do users drop off at the crucial moments?

It is often down to the fact that journeys get shaped around what is buildable rather than what is usable.

The paths users take are too often reverse-engineered from either backend logic or business constraints.

But a good UX audit interrogates their very existence.

It asks who the design is really serving.

It pulls at the loose threads of every screen to see what unravels: decisions no longer tied to user value, or designs produced more to impress than to convey meaning.

These are usually the signals that the product has been optimised for organisational comfort, not user clarity.

Audits should start with discomfort.

This is critical because meaningful UX work hinges on confronting whether the product still serves its original purpose or has drifted into habit.

It requires us to step back, not to admire what we’ve built, but to ask whether it still makes sense for the user’s lived reality.

Because if it no longer serves a valid need, no amount of surface refinement will redeem a product built on outdated assumptions.

Polishing an obsolete flow only delays the inevitable: users disengaging from something that should have been rethought entirely.

Good UX is rarely about momentum. It’s about pause, resistance, and deliberate realignment.

Because if a flow still exists only because it’s been too expensive to challenge, it is not a journey. It is inertia.

The audit’s job is to surface that uncomfortable truth, not decorate around it.

📌 What’s Inside

- How to identify journey mismatches

- Numbers alone don’t reveal the truth

- When pacing falls out of sync

- AI can help, but watch the hand that feeds it

- How to structure an effective audit

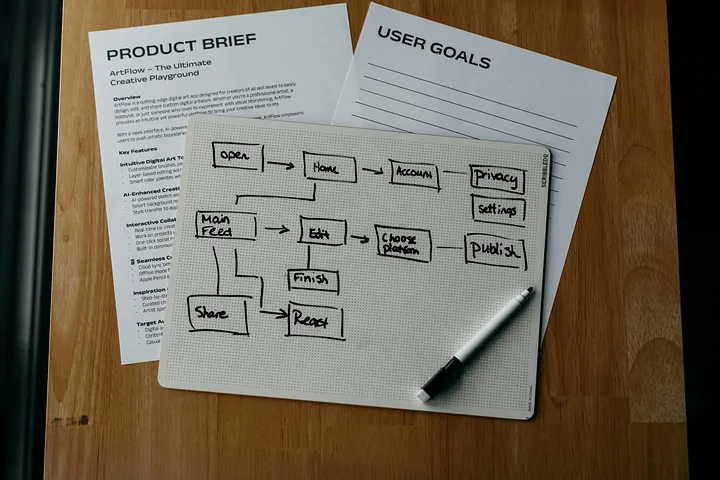

🔍 How to identify journey mismatches

What makes a good journey?

Not flowcharts. Not screens stitched together in a prototype. But the invisible logic of user behaviour: pacing, hesitation, mental model matching.

Too many audits stop at visual and IA checklists. They evaluate colour, hierarchy, and spacing, but often skip the awkward questions:

- Does the screen show up when the user needs it?

- Is this task even part of their actual workflow?

- Are we solving their goal, or forcing them through ours?

A common audit failure is mistaking visual coherence for user clarity.

Screens might appear consistent and intuitive, yet still cause user hesitation.

This is especially true when language, hierarchy, or micro-interactions fail to reflect the user’s mental model.

For instance, ambiguous permissions, confirmation states, or task outcomes can cause users to pause, backtrack, or abandon their flows entirely.

These are not failures of interface but failures of alignment between system logic and user expectation.

The confusion is behavioural and contextual, not visual.

To identify it, we have to stop looking at the screen and start looking at the user’s hesitation.

Look for where they pause unexpectedly. Where they re-read. Where they hover without clicking. These are signals of doubt, not direction.

Behavioural gaps often reveal themselves in mismatched expectations: when users act with one mental model and the product responds with another.

To uncover these, it’s helpful to watch for points of friction that traditional analytics overlook.

For instance, replay session recordings and isolate the moments where users hesitate or repeat actions, or review customer support tickets that indicate confusion, surprise, or unmet expectations.

You can also look at search queries within the product: users often type what they expect to find, not what you labelled it.

And, of course, abandoned tasks. These are the purest signal of a behavioural gap: the user stops not out of resistance, but because the experience failed to validate their next step.

Observation (real observation, not heatmaps alone) is the only way to surface these mismatches.

You will not find them in the design file. You will find them the moment someone clicks, waits, and asks themselves, “Is this doing what I think it’s doing?”

🔢 Numbers alone don’t reveal the truth

Audits often chase the wrong indicators: bounce rates, time-on-page, conversion.

But…

“analytics tell you where users struggle, not why.”

You see drop-off at step three of your onboarding, so you redesign the tooltip. But did anyone stop to ask whether the step should exist at all?

An interesting case study: Monzo, a UK-based digital bank, once required new users to complete full know-your-customer (KYC) verification, including a passport scan and video recording, before they could explore in-app features like budgeting tools or transaction categorisation.

According to user feedback, this created a premature barrier.

Post-audit usability sessions and interviews revealed that users were not confused; they actively resisted the flow, expecting to preview functionality before committing personal data.

The drop-off was a protest, not a misunderstanding: the resistance was behavioural, not instructional.

The real issue was a mismatch between business logic and user pacing.

Business needs (in this case, verifying identity upfront to reduce fraud risk) collided with users’ natural desire to explore the product before committing sensitive information.

Navigating this sort of tension requires structural negotiation: dissecting which requirements are non-negotiable and which are habits disguised as policy.

Introducing progressive disclosure, sandboxed previews, or delayed authentication checkpoints can preserve business goals without compromising user momentum.

But the deeper work is cultural: aligning product priorities with behavioural truths, not internal assumptions.

It means asking whether what has been deemed ‘critical’ by the business is genuinely rooted in legal, regulatory, or user-centred imperatives, or whether it simply reflects internal habits or institutional inertia.

Sometimes, process rigidity is simply disguised as necessity.

We must therefore create deliberate moments for negotiation between compliance, design, research, and product leadership.

Ultimately, every user-facing requirement carries a behavioural cost.

A meaningful audit reframes flows not around what the business prefers to enforce, but around what users are cognitively and emotionally ready to do.

Until organisations allow user behaviour to challenge internal logic, flows will continue to look sound in architecture but collapse in practice.

🛑 When pacing falls out of sync

Misaligned pacing is one of the most consistent and least interrogated causes of user abandonment.

It manifests when interfaces demand decisions before users have the clarity or confidence to make them.

In many cases, users are asked to provide commitment (data, time, trust) before even receiving enough context to justify it.

Naturally, the result is hesitation, doubt, and eventually, disengagement.

The challenge with pacing is that it rarely announces itself. Unlike visual issues or performance lags, misaligned pacing lives in silence: the second guess, the unclicked button, the task abandoned mid-flow.

It breaks the natural sequencing users expect and disrupts the mental model they bring into the interaction.

Baymard Institute’s research reinforces this: 18% of users abandon a purchase because the checkout process is too long or complicated, often the result of unnecessary form fields or poor sequencing.

In one study, users were asked to enter full address information before being shown delivery options.

The interface itself worked flawlessly, but the sequence did not.

Users expected to preview cost and speed before offering personal details. When that expectation wasn’t met, drop-offs spiked.

Pacing mismatches like these are not edge cases. They are systemic, and they often reflect internal priorities disguised as logical sequences.

Fixing them requires reordering flows around what users need to know before they’re asked to act.

👁️ AI can help, but watch the hand that feeds it

AI has accelerated how we surface patterns, but it hasn’t improved how we interpret them.

Many tools can now surface rage clicks, exit funnels, and behavioural paths in seconds, but that speed can be misleading.

Synthetic analytics reflect synthetic priorities.

As the Interaction Design Foundation puts it,

“AI detects patterns, but cannot discern purpose.”

In other words, engagement is not endorsement: it might signal curiosity, confusion, or plain friction.

A frequently clicked button could be solving a problem, or it could BE the problem.

To use AI meaningfully in UX audits, the best path forward is perhaps to pair machine-generated signals with context-rich methods.

Session replays should be reviewed alongside real-time usability testing. Think-aloud protocols can be transcribed, but must then be interpreted through behavioural frameworks, such as Norman’s Gulf of Execution (more on this next week), to pinpoint where user intent breaks from system response.

The Gulf of Execution clarifies the disconnect between what a user wants to do and what the system allows or communicates.

In audit practice, this means assessing whether the interface makes available actions perceptible and understandable, and whether users can map their goals to those actions.

When users hesitate, misclick, or repeat steps, they are often falling into this gulf.

AI can show us what users are doing.

But only human analysis, rooted in theory and observed behaviour, can explain why they’re doing it, and whether they should be.

📋 How to structure an effective audit

A structured UX audit is a sequence of intentional evaluations, each one targeting a different layer of the experience.

It usually begins with evaluating behaviour: identifying where the user journey feels smooth and where it hesitates.

Screen recordings are often helpful for mapping pacing issues, and customer support logs for isolating repeated frustrations.

Then, usability testing comes into play, often revealing mismatched mental models.

From there, it helps to question each flow against user intent: is this step necessary, or inherited? Is it serving the user’s goal, or just fulfilling a business requirement?

Specifically, ask:

- Where do users slow down, and why?

- What questions are they asking that the UI never answers?

- Where do drop-offs happen for valid reasons?

- What assumptions were never tested?

All of these questions will require you to sit with discomfort.

They force us to confront the real structural compromises embedded in a product. But that discomfort is part of the method.

Because until those uncomfortable truths are surfaced and negotiated, the product remains reactive, not responsive. And no matter how refined the interface looks, a product misaligned with human behaviour will always struggle to hold attention, trust, or value.

“The cost of poor UX is not frustration. It is abandonment.”

And the cost of poor audits (or no audits at all) is a product that doesn’t evolve, doesn’t serve, and slowly loses relevance.

What’s left is not a failure of execution, but a failure to question the premise. The result is a product that may function, but no longer matters.

“Audits are less about fixing what’s wrong, and more about rediscovering what matters.”

At the end of the day, good journeys start with asking, again and again, whether each screen belongs in them in the first place.

📚 Sources & Further Reading

- UXMatters: Performing a UX Audit on an Existing Product

- Baymard Institute: Checkout UX Benchmark (2023)

- Interaction Design Foundation: Artificial Intelligence (AI)

- UX Review: Monzo

- Best in class onboarding

- Nielsen Norman Group: The Two UX Gulfs: Evaluation and Execution
